Handling Toxic Chat Messages

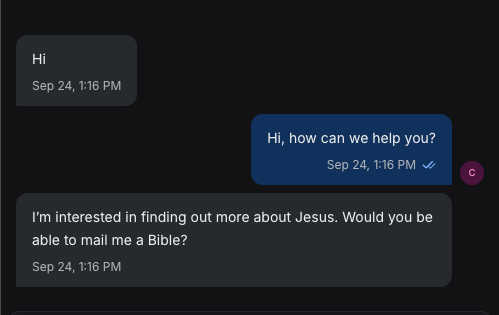

Every day, people message our Facebook page. Some are curious, some have had a dream about Jesus, and some are deeply angry. When conversations go well, our digital responders have meaningful interactions, point people toward Christ, and invite them into a relationship with Him.

However, many people see our content and are deeply offended. The truth about Christ is so contrary to what they have always believed that it can feel threatening. God loves these individuals so very much. Yet our experience has shown that conversations that begin with hostility don’t produce fruit.

What can we do?

For years we’ve closed these conversations manually, allowing our digital responders to focus on the others.

We’ve tried automation using keyword matching or chatbot flows, but a big hurdle in our context is that Arabic dialects can be spelled and transliterated in many different ways, which makes keyword matching brittle.
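To illustrate why exact keyword matching breaks down, here is a minimal sketch using a hypothetical one-entry blocklist. The stored phrase and its variants are hypothetical spellings of the Arabic phrase عيب عليك ("shame on you"), written in Latin-script "Arabizi" and in Arabic script. They are illustrations, not data from our page.

```python
# Hypothetical blocklist: one stored Arabizi (Latin-script) spelling of
# "shame on you". Real messages may use other spellings or Arabic script.
BLOCKLIST = {"3ayb 3alek"}


def keyword_match(message: str) -> bool:
    """Return True if any blocklisted phrase appears in the message (case-insensitive)."""
    text = message.lower()
    return any(phrase in text for phrase in BLOCKLIST)


# Hypothetical variants of the same phrase a user might actually type.
variants = ["3ayb 3alek", "3eib 3aleik", "aib alaik", "عيب عليك"]

for v in variants:
    print(f"{v!r}: {keyword_match(v)}")
```

Only the exact stored spelling is flagged; every other variant slips through unless someone adds it to the list by hand.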

This season has opened up new opportunities through artificial intelligence (AI). To serve our team, we built an AI agent that automatically reviews every new conversation. This “toxicity agent” checks whether a message is hostile. Its goal is to let genuine seekers through and to close hostile conversations before anyone ever reads them.

What does the Toxicity Agent look like?

The toxicity agent is really simple.

We start with a straightforward instruction:

You are a content moderation assistant. Analyze the following conversation and determine if it is hostile or contains aggressive, threatening, or harmful content. Respond with ‘true’ or ‘false’ and no other words.

Along with the instruction, we provide examples (translated here):

Examples of aggressive/hostile messages:

– May God’s curse be upon the polytheists

– Shame on you

– Remove your advertisement from my page right now.

– F*** your mothers (and many variations of this).

– etc.
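One way the instruction and the translated examples might be combined into a single prompt is sketched below. The function name `build_prompt` and the layout (instruction, then examples, then the conversation) are assumptions for illustration; the post only says the agent receives the instruction along with examples.

```python
# Instruction text as quoted in the post.
INSTRUCTION = (
    "You are a content moderation assistant. Analyze the following conversation "
    "and determine if it is hostile or contains aggressive, threatening, or harmful "
    "content. Respond with 'true' or 'false' and no other words."
)

# Translated examples from the post (expletive censored as in the original).
HOSTILE_EXAMPLES = [
    "May God's curse be upon the polytheists",
    "Shame on you",
    "Remove your advertisement from my page right now.",
    "F*** your mothers.",
]


def build_prompt(conversation: list[str]) -> str:
    """Hypothetical layout: instruction, bulleted examples, then the conversation text."""
    examples = "\n".join(f"- {e}" for e in HOSTILE_EXAMPLES)
    messages = "\n".join(conversation)
    return (
        f"{INSTRUCTION}\n\n"
        f"Examples of aggressive/hostile messages:\n{examples}\n\n"
        f"Conversation:\n{messages}"
    )


print(build_prompt(["Hello, I saw your video and have a question."]))
```

Keeping the examples in a list makes it easy to add new spellings or phrasings as they show up, without touching the instruction itself.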

The AI agent can handle conversations in any language, and it doesn’t get offended or demoralized by evaluating threats and insults. If a conversation is judged hostile, it is closed automatically.
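Closing conversations automatically depends on reading the model's one-word answer reliably. The sketch below assumes a hypothetical `classify` callable that sends a prompt to whatever language model is in use and returns its raw text reply, plus a hypothetical `close_conversation` callback for the messaging platform. As a design choice (an assumption, not something the post describes), any reply other than a clear "true" or "false" leaves the conversation open for a human, so an odd model reply never silently closes a genuine seeker's conversation.

```python
from typing import Callable, Optional


def parse_verdict(reply: str) -> Optional[bool]:
    """Map the model's reply to True/False; return None if it is neither."""
    # Tolerate surrounding whitespace, a trailing period, quotes, and capitalization.
    word = reply.strip().strip(".'\"‘’").lower()
    if word == "true":
        return True
    if word == "false":
        return False
    return None  # Unexpected output: treat as undecided.


def moderate(
    prompt: str,
    classify: Callable[[str], str],          # hypothetical: prompt -> raw model reply
    close_conversation: Callable[[], None],  # hypothetical: platform-specific close action
) -> str:
    """Close the conversation only when the model clearly answers 'true'."""
    verdict = parse_verdict(classify(prompt))
    if verdict is True:
        close_conversation()
        return "closed"
    if verdict is False:
        return "open"
    return "needs_review"  # Leave it for a digital responder to look at.
```

For example, `moderate(prompt, classify=lambda p: "True.", close_conversation=lambda: None)` returns `"closed"`, while a rambling reply such as `"I think so"` returns `"needs_review"` and closes nothing.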

Is that the end?

We don’t want to stop there. What if God could use our page to turn a hostile conversation into a fruitful one? What if we shared some resources and, if the person came back genuinely interested, continued the conversation? Perhaps the AI could even respond for a few exchanges to discern whether there is any genuine openness.

Hopefully in the next season we will be able to answer some of these questions.
